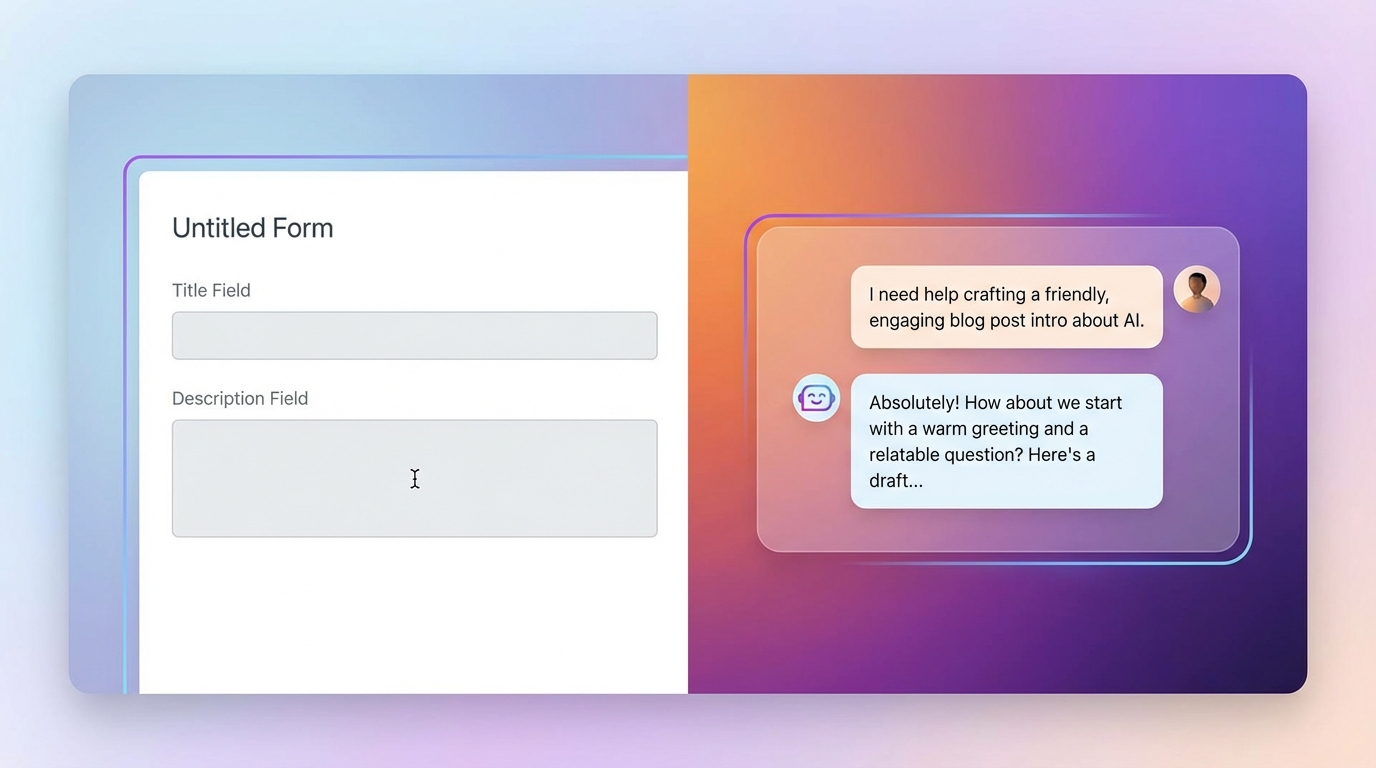

Conversational AI vs. Forms: Why Natural Dialogue Gets Better Feedback

Compare traditional feedback forms with AI conversational interfaces. Discover how dialogue-based feedback collection uncovers user motivation, context, and workarounds that static forms miss entirely.

Summary

Traditional feedback forms collect structured data but miss the story behind user requests. Conversational AI interfaces use natural dialogue to uncover motivation, context, and workarounds that static fields cannot capture. This article compares both approaches with a side-by-side example showing how the same feature request yields dramatically different insights.

The Problem with Traditional Forms

Every product team has experienced it: a feedback form submission that reads "Add dark mode" or "Make it faster." The request exists, but the context is missing.

Traditional feedback forms typically include:

- Title field: A brief label for the request

- Description field: Open text for explanation

- Category dropdown: Bug, feature, improvement

- Priority selector: Low, medium, high

Users submit these forms quickly. They assume the product team understands their situation. They don't realize how much context remains trapped in their heads.

What Forms Actually Capture

| Field | Typical Response | What's Missing |
|---|---|---|
| Title | "Dark mode" | Why? When? For whom? |
| Description | "Please add dark mode to the app" | Situation, motivation, alternatives |
| Category | Feature Request | Urgency, impact on workflow |
| Priority | High | Relative to what? Based on what criteria? |

The form creates a ticket. The ticket lacks meaning.

How Conversational AI Changes Everything

Conversational AI approaches feedback differently. Instead of presenting empty fields, it engages users in dialogue. An empathetic AI persona asks follow-up questions based on each response, pursues threads worth exploring, and extracts structured data from the conversation.

The Jobs-to-Be-Done Framework

Modern conversational feedback systems extract information aligned with the Jobs-to-Be-Done (JTBD) framework:

- Situation: When and where does this need arise?

- Motivation: Why do they want this? What outcome matters?

- Workaround: How do they currently handle this?

- Friction: What's frustrating about the current approach?

This framework reveals not just what users want but why they want it. Product teams can then solve the underlying problem rather than implementing the surface request.

Side-by-Side: The Same Request, Two Approaches

Let's examine how the same user feedback appears through both collection methods.

Traditional Form Submission

Title: Dark mode option

Description: Would be great if you could add a dark mode. I work late sometimes and the bright screen hurts my eyes.

Category: Feature Request

Priority: Medium

Total context: 24 words across the title and description. The product team knows the user wants dark mode for late-night work. That's it.

Conversational AI Exchange

AI: Hi! Thanks for reaching out. I'd love to hear what's on your mind regarding our product.

User: Yeah, I really wish you had dark mode.

AI: Got it, dark mode would help you out. Can you tell me a bit about when you typically find yourself wanting this? Is it during specific situations or times?

User: Mostly at night. I work on projects after my kids go to bed, usually from like 9pm to midnight. The white background is really harsh.

AI: That makes sense. Working late after the kids are asleep and dealing with a harsh bright screen sounds uncomfortable. Have you tried any workarounds to make it easier on your eyes?

User: I've tried turning down my monitor brightness, and I use the Night Shift mode on my Mac, but your app still looks really bright compared to everything else. I even tried a browser extension that inverts colors but it messed up the icons and graphs.

AI: Ah, so you've actually tried several things - Night Shift, lower brightness, even a color inverting extension that broke the visuals. So the issue isn't just brightness, it's that your app specifically stands out against everything else on the screen. How much is this affecting your actual work?

User: Honestly? Sometimes I just don't open the app at night. I'll make a note to check it in the morning instead. Which means I'm not capturing ideas when I actually have them.

AI: That's a significant impact. You're avoiding the app during your most productive creative time, and losing ideas because of it. Would you say this is affecting how much value you get from the product?

User: Definitely. I'm paying for it but probably only using it half as much as I could be.

Extracted Data from Conversation

| JTBD Element | Extracted Information |
|---|---|
| Situation | Working on projects late at night (9pm-midnight) after kids sleep |
| Motivation | Reduce eye strain during extended evening sessions; capture ideas when they occur |
| Workaround | Monitor brightness reduction, macOS Night Shift, color-inverting browser extension |
| Friction | App appears harsh compared to other apps; workarounds break UI elements; avoiding app entirely during productive hours |
| Impact | Using product 50% less than potential; losing ideas; questioning subscription value |

Auto-generated title: "Dark mode to support nighttime work sessions"

Auto-generated description: "User works on projects 9pm-midnight after kids sleep. Current bright interface causes eye strain and stands out harshly against everything else on screen. Has tried lowering monitor brightness, Night Shift, and a color-inverting browser extension without success. Currently avoiding the app during evening creative sessions, resulting in lost ideas and reduced perceived value from the subscription."

What Conversational AI Uncovers That Forms Miss

1. The Real Job to Be Done

The form said "dark mode." The conversation revealed: "I need to capture ideas during my most productive time without physical discomfort."

These are fundamentally different problems. One leads to a color palette change. The other leads to questions about when users are most creative and how the product fits into their workflow.

2. Current Workarounds and Their Failures

The user tried three separate solutions before submitting feedback. Each workaround failed in specific ways. This information tells the product team:

- The problem is real enough to motivate action

- Simple solutions don't work

- Integration with the OS matters

- Visual elements need native dark mode support, not just color inversion

3. Business Impact

"I'm paying for it but probably only using it half as much as I could be."

This single statement transforms a feature request into a retention risk. The user explicitly connected the missing feature to perceived subscription value. Product teams can now prioritize this request against churn risk, not just against other feature requests.

4. Usage Patterns

Evening creative sessions from 9pm to midnight. This reveals a user segment the team might not have considered: parents who work on creative projects after children sleep. Are there others? What other needs does this segment have?

How AI Conversational Feedback Works

Modern AI feedback systems typically follow a four-step pattern:

1. Persona-Based Engagement

Different feedback types trigger different AI personas:

- Feature Requests: Empathetic product manager who explores motivation and impact

- Bug Reports: Supportive technical specialist who gathers reproduction steps and context
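A minimal Python sketch of persona selection, assuming the feedback type is already known (for example, from which button the user clicked). The `FeedbackType`, `Persona`, and `PERSONAS` names and the prompt wording are hypothetical illustrations, not part of any specific product's API.

```python
from dataclasses import dataclass
from enum import Enum


class FeedbackType(Enum):
    FEATURE_REQUEST = "feature_request"
    BUG_REPORT = "bug_report"


@dataclass(frozen=True)
class Persona:
    role: str
    goal: str
    system_prompt: str


# Hypothetical registry mapping each feedback type to the persona that handles it.
PERSONAS = {
    FeedbackType.FEATURE_REQUEST: Persona(
        role="Empathetic product manager",
        goal="Explore motivation and impact",
        system_prompt=(
            "You are an empathetic product manager. Ask one question at a time "
            "about the user's situation, motivation, workarounds, and friction."
        ),
    ),
    FeedbackType.BUG_REPORT: Persona(
        role="Supportive technical specialist",
        goal="Gather reproduction steps and context",
        system_prompt=(
            "You are a supportive technical specialist. Ask how to reproduce "
            "the problem, what the user expected, and what actually happened."
        ),
    ),
}


def select_persona(feedback_type: FeedbackType) -> Persona:
    """Return the persona that opens a conversation of the given type."""
    return PERSONAS[feedback_type]


persona = select_persona(FeedbackType.BUG_REPORT)
print(persona.role)  # Supportive technical specialist
```

Keeping personas in a lookup table means adding a new feedback type is a data change rather than a new code path.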

2. Adaptive Questioning

The AI doesn't follow a script. It responds to what the user says:

- If the user mentions a workaround, it asks about friction

- If the user expresses frustration, it validates and explores impact

- If the user is brief, it asks clarifying questions

- If the user is detailed, it summarizes and confirms understanding
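The adaptive rules above can be approximated with a small dispatcher. This sketch uses hypothetical keyword cues and a word-count threshold as stand-ins; a real system would more likely ask the language model itself to classify each turn. Rules are checked in the order listed, so a message that mentions a workaround is treated as a workaround even if it also sounds frustrated.

```python
import re
from typing import Sequence

# Hypothetical cue phrases; a production system would more likely let the model classify.
WORKAROUND_CUES = ("i tried", "i've tried", "i use", "i end up", "instead")
FRUSTRATION_CUES = ("annoying", "frustrating", "harsh", "hate", "hurts")


def has_cue(text: str, cues: Sequence[str]) -> bool:
    """True if any cue appears as a whole phrase, not inside another word."""
    return any(re.search(rf"\b{re.escape(cue)}\b", text) for cue in cues)


def next_move(user_message: str, brief_threshold: int = 12) -> str:
    """Choose the next conversational move, checking rules in priority order."""
    text = user_message.lower()
    word_count = len(re.findall(r"\w+", text))
    if has_cue(text, WORKAROUND_CUES):
        return "ask_about_friction"
    if has_cue(text, FRUSTRATION_CUES):
        return "validate_and_explore_impact"
    if word_count < brief_threshold:
        return "ask_clarifying_question"
    return "summarize_and_confirm"


print(next_move("Yeah, I really wish you had dark mode."))  # ask_clarifying_question
```

Applied to the dark mode conversation, "I've tried turning down my monitor brightness..." maps to `ask_about_friction`, and the user's longer closing statement about subscription value maps to `summarize_and_confirm`.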

3. Structured Extraction

Throughout the conversation, the AI extracts structured data:

```json
{
  "situation": "Working late night after kids sleep",
  "motivation": "Capture ideas during productive time",
  "workaround": "Night Shift, browser extension, brightness",
  "friction": "Workarounds break UI, app too bright",
  "impact": "50% reduced usage, subscription value questioned",
  "sentiment": "frustrated but engaged",
  "urgency": "high - affecting core use case"
}
```
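One way to build a record like this is to merge partial extractions turn by turn. The sketch below assumes the model returns a JSON object after each user message containing only the fields it learned in that turn; the `FeedbackRecord` class and its `merge` and `merge_json` methods are hypothetical.

```python
import json
from dataclasses import asdict, dataclass, fields
from typing import Optional


@dataclass
class FeedbackRecord:
    """Running JTBD record for one conversation (hypothetical schema)."""
    situation: Optional[str] = None
    motivation: Optional[str] = None
    workaround: Optional[str] = None
    friction: Optional[str] = None
    impact: Optional[str] = None
    sentiment: Optional[str] = None
    urgency: Optional[str] = None

    def merge(self, partial: dict) -> None:
        """Fold one turn's extraction into the record.

        Unknown keys are ignored. Empty values are skipped, so they never
        erase earlier answers; non-empty values replace earlier ones.
        """
        known = {f.name for f in fields(self)}
        for key, value in partial.items():
            if key in known and value:
                setattr(self, key, value)

    def merge_json(self, raw: str) -> None:
        """Parse a model reply expected to be a JSON object, then merge it."""
        data = json.loads(raw)
        if not isinstance(data, dict):
            raise ValueError("expected a JSON object")
        self.merge(data)


record = FeedbackRecord()
record.merge_json('{"situation": "Working late night after kids sleep"}')
record.merge_json('{"workaround": "Night Shift, browser extension", "situation": ""}')
print(json.dumps(asdict(record), indent=2))
```

Skipping empty values matters because a model asked about workarounds will often return an empty `situation` for that turn, which should not wipe out what it learned earlier.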

4. Professional Summary Generation

The AI generates a professional ticket that product teams can immediately act on:

- Clear, descriptive title

- Context-rich description

- Preserved conversation for reference

- Categorized by impact and urgency
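In the flow described here, the AI writes the title and description itself. The sketch below substitutes a fixed template so the ticket's shape is easy to see; `Ticket`, `build_ticket`, and the parsing of the `"high - ..."` urgency string are assumptions based on the example record above, not a documented format.

```python
from dataclasses import dataclass, field
from typing import List

# Order in which extracted elements appear in the description.
SECTIONS = ("situation", "motivation", "workaround", "friction", "impact")


@dataclass
class Ticket:
    title: str
    description: str
    urgency: str  # urgency label, e.g. "high"
    transcript: List[str] = field(default_factory=list)  # kept for reference


def build_ticket(request: str, extracted: dict, transcript: List[str]) -> Ticket:
    """Assemble a ticket from extracted fields with a fixed template."""
    context = extracted.get("situation") or "context not captured"
    title = f"{request}: {context}"
    description = "\n".join(
        f"{name.capitalize()}: {extracted[name]}"
        for name in SECTIONS
        if extracted.get(name)
    )
    # "high - affecting core use case" -> "high"
    urgency = (extracted.get("urgency") or "unknown").split(" - ")[0].strip()
    return Ticket(title, description, urgency, list(transcript))


ticket = build_ticket(
    "Dark mode",
    {
        "situation": "Working late night after kids sleep",
        "friction": "Workarounds break UI, app too bright",
        "urgency": "high - affecting core use case",
    },
    ["User: Yeah, I really wish you had dark mode."],
)
print(ticket.title)    # Dark mode: Working late night after kids sleep
print(ticket.urgency)  # high
```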

The Conversation Matters Beyond Data

Storing the full conversation provides value beyond the extracted data:

Pattern Recognition

Product teams can search conversations for common phrases:

- "I tried but..."

- "I end up just..."

- "I wish I could..."

These reveal frustration patterns across users.
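A minimal sketch of that search, assuming each stored conversation is available as a list of the user's messages. The regular expressions are hypothetical approximations of the three phrases, and the counter reports how many conversations contain each pattern rather than how many times it appears.

```python
import re
from collections import Counter
from typing import List

# Hypothetical regexes approximating the three phrases listed above.
PATTERNS = {
    "tried_but": re.compile(r"\bi(?:'ve)? tried\b.*\bbut\b", re.IGNORECASE),
    "end_up_just": re.compile(r"\bi end up just\b", re.IGNORECASE),
    "wish_i_could": re.compile(r"\bi wish i could\b", re.IGNORECASE),
}


def count_patterns(conversations: List[List[str]]) -> Counter:
    """Count the conversations in which each pattern appears in any user turn."""
    counts: Counter = Counter()
    for user_turns in conversations:
        for name, pattern in PATTERNS.items():
            # Search turn by turn so "tried" and "but" must share one message.
            if any(pattern.search(turn) for turn in user_turns):
                counts[name] += 1
    return counts


conversations = [
    ["I've tried lowering the brightness, but it's still too bright."],
    ["I end up just checking it in the morning.", "I wish I could export to PDF."],
    ["Looks great, thanks!"],
]
print(count_patterns(conversations))
```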

Voice of Customer

Marketing and support teams can quote users directly (with permission). Real language resonates more than sanitized summaries.

Training Data

Aggregated conversations reveal how users describe problems. This improves documentation, in-app guidance, and AI training.

Implementation Considerations

Conversation Length

Effective feedback conversations typically run 5-7 exchanges. Too short and you miss context. Too long and users disengage. The AI should wrap up gracefully when it has captured sufficient information.
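A wrap-up rule along these lines can be expressed as a small predicate. This sketch assumes the extraction step keeps a dictionary of JTBD fields like the structured-extraction example in this article, treats the upper end of the 5-7 exchange range as a hard cap, and uses hypothetical names (`MAX_EXCHANGES`, `CORE_FIELDS`, `should_wrap_up`).

```python
# Hypothetical limits: the upper end of the 5-7 exchange range as a hard cap,
# and the four JTBD elements as the definition of "sufficient information".
MAX_EXCHANGES = 7
CORE_FIELDS = ("situation", "motivation", "workaround", "friction")


def should_wrap_up(exchanges: int, extracted: dict) -> bool:
    """Return True once every core field is filled or the exchange cap is reached."""
    if exchanges >= MAX_EXCHANGES:
        return True
    return all(extracted.get(name) for name in CORE_FIELDS)


print(should_wrap_up(3, {"situation": "Late nights after kids sleep"}))  # False
```

When this returns True, the AI can summarize what it heard and ask the user to confirm, rather than ending abruptly.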

Token and Cost Management

Conversational AI runs on language models, and every exchange consumes tokens that carry a cost. Implement sensible limits:

- Maximum conversation length

- Graceful handoff to human support for complex issues

- Efficient context management to reduce token usage
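These limits can be sketched as two helpers: one that trims older messages to fit a context budget, and one that decides when to hand off to a human. The limits are hypothetical, and the four-characters-per-token estimate is only a rough rule of thumb for English text; a production system should count tokens with the model's own tokenizer and would usually keep the system prompt and extracted record outside the trimmed history.

```python
from typing import List

# Hypothetical limits; tune them against your model's pricing and context window.
MAX_USER_TURNS = 10
CONTEXT_TOKEN_BUDGET = 2000


def estimate_tokens(text: str) -> int:
    """Rough estimate of about four characters per token (English text)."""
    return max(1, len(text) // 4)


def trim_history(messages: List[str], budget: int = CONTEXT_TOKEN_BUDGET) -> List[str]:
    """Keep the newest messages whose estimated tokens fit within the budget."""
    kept: List[str] = []
    used = 0
    for message in reversed(messages):  # walk from newest to oldest
        cost = estimate_tokens(message)
        if used + cost > budget:
            break
        kept.append(message)
        used += cost
    kept.reverse()  # restore chronological order
    return kept


def needs_handoff(user_turns: int, flagged_complex: bool) -> bool:
    """Hand off to human support at the turn cap or when an issue is complex."""
    return flagged_complex or user_turns >= MAX_USER_TURNS
```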

Privacy and Data Handling

Conversations may contain personal information users wouldn't type into forms. Implement:

- Clear data handling disclosures

- Automatic PII detection

- User control over conversation storage
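Automatic PII detection can start with pattern matching before conversations are stored. This sketch covers only email addresses and phone-like digit runs; it will miss names and street addresses and can over-match (an ISO date such as 2024-01-09 looks like a phone number to the second pattern), so a dedicated PII detection tool is a better fit in production. `PII_PATTERNS` and `redact_pii` are hypothetical names.

```python
import re
from typing import List, Tuple

# Illustrative patterns only. Order matters: emails are redacted first so the
# digits inside an address are not picked up by the phone pattern.
PII_PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    "PHONE": re.compile(r"\+?\d[\d\s().-]{7,}\d"),
}


def redact_pii(text: str) -> Tuple[str, List[str]]:
    """Replace matches with [LABEL] placeholders and list the labels found."""
    found: List[str] = []
    for label, pattern in PII_PATTERNS.items():
        text, count = pattern.subn(f"[{label}]", text)
        if count:
            found.append(label)
    return text, found


clean, labels = redact_pii("Reach me at jane.doe@example.com or +1 (555) 123-4567.")
print(clean)   # Reach me at [EMAIL] or [PHONE].
print(labels)  # ['EMAIL', 'PHONE']
```

Returning the list of labels lets the system flag a conversation for review, or ask the user before storing it, without keeping the raw values.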

Quantitative Comparison

| Metric | Traditional Form | Conversational AI |
|---|---|---|
| Avg. words per submission | 15-30 | 150-300 |
| Context elements captured | 1-2 | 4-6 |
| Actionable insights | Low | High |
| User effort perception | Low | Variable (some find it easier) |
| Completion rate | Higher | Slightly lower |
| Quality per submission | Lower | Significantly higher |

The trade-off is clear: forms collect more submissions with less value each. Conversations collect fewer submissions with substantially higher value per submission.

When to Use Each Approach

Use Traditional Forms When:

- You need high-volume, low-friction collection

- The feedback type is simple and structured (ratings, yes/no)

- Users are in a hurry (checkout flows, quick polls)

- You already have strong user research channels

Use Conversational AI When:

- Quality matters more than quantity

- You need to understand motivation and context

- Users have complex needs to express

- You're building a product with high engagement expectations

- You want to reduce follow-up research needs

Key Takeaways

- Forms capture requests; conversations capture context. The same "dark mode" request yields about two dozen words from a form and a rich narrative from conversation.

- JTBD extraction reveals the real problem. Users don't want features; they want outcomes. Conversation uncovers outcomes.

- Workarounds prove importance. When users describe failed solutions, they demonstrate the problem matters enough to work around.

- Business impact emerges naturally. Users rarely state "this affects my subscription value" in form fields. In conversation, it comes up on its own.

- Full conversations become organizational assets. Searchable, quotable, analyzable dialogue serves multiple teams.

Conclusion

Traditional forms remain valuable for high-volume, structured data collection. But for understanding why users want what they want, conversational AI provides substantially richer insights.

The choice isn't binary. Many products benefit from both: quick forms for simple feedback, conversational interfaces for complex requests and feature discussions.

The question for your product team: are you collecting requests, or understanding needs? The answer determines which approach serves you best.

This article explores the feedback collection philosophy behind UserVibesOS. For technical implementation details, see our documentation on AI-powered feedback collection.


Written by UserVibes Team

Published on January 9, 2026