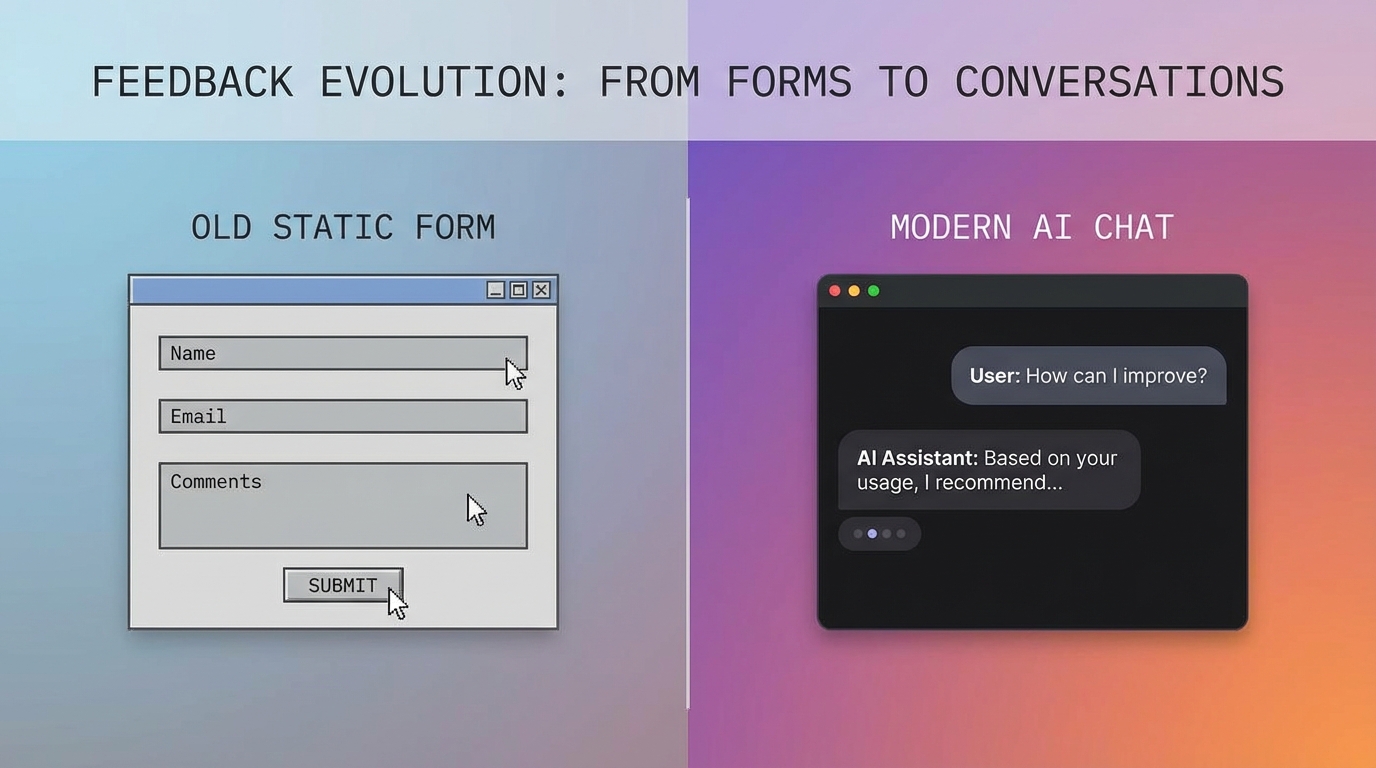

Why Traditional Feedback Forms Fail: The Case for Conversational AI

Traditional feedback forms collect data but miss context. Learn why conversational AI captures richer insights and how to implement it in your product.

Summary

Traditional feedback forms collect structured data but consistently miss the story behind user requests. Conversational AI interfaces use natural dialogue to uncover the motivation, context, and workarounds that static fields cannot capture. This article examines why forms fall short and how AI-driven feedback collection can yield much richer, more actionable insights for product teams.

The Problem with Traditional Feedback Forms

Every product team knows the frustration. You deploy a feedback form, responses trickle in, and you're left with data that raises more questions than it answers. "Add dark mode." "Make it faster." "The export feature is broken."

These responses tell you what users want but never why they want it. Traditional forms suffer from three fundamental limitations that no amount of field optimization can solve.

The Constraint of Predetermined Questions

Traditional forms force users into boxes you've already defined. You ask about features you're considering, problems you've anticipated, and satisfaction with workflows you understand. But users experience your product in ways you never imagined.

When a user writes "export is broken" in a text field, you don't know:

- What they were trying to export

- What format they needed

- What downstream system receives the export

- Whether a workaround exists that you could document

- How urgent this is to their workflow

A form can't ask follow-up questions. It collects the symptom and moves on.

Response Fatigue and Abandonment

Long forms get abandoned. Short forms miss context. This tension has no resolution within the traditional paradigm.

Completion rates generally fall as forms grow longer, and many teams see a steep drop once a form passes three or four fields. Yet meaningful feedback often requires understanding:

- User role and use case

- Specific scenario triggering the feedback

- Impact on their workflow

- Attempted workarounds

- Priority relative to other issues

Fitting all of this into three fields is impossible. Spreading it across ten fields all but guarantees abandonment.

The Missing Emotional Context

Text fields capture words but not tone. A user writing "this feature would be nice" and one writing the same words while frustrated after a failed demo are providing very different signals. Forms flatten this emotional dimension into undifferentiated text.

How Conversational AI Changes Everything

Conversational interfaces transform feedback collection from interrogation into dialogue. Instead of presenting a wall of fields, AI engages users naturally, adapting its questions based on responses.

Dynamic Follow-Up Questions

When a user mentions "export issues," a conversational AI can probe deeper:

User: The export feature isn't working for my use case.

AI: I'd like to understand your export needs better. What format are you trying to export to, and what system receives that export?

User: I need CSV exports for our data warehouse. The current JSON format requires manual conversion.

AI: That's helpful context. How often do you need to run these exports, and roughly how much time does the manual conversion add?

User: Daily. It adds about 30 minutes each time because I have to clean up the nested objects.

This single exchange reveals more than a dozen typical form submissions would. You now know the format needed, the destination system, the frequency, and the concrete time cost. A form would have captured "export doesn't work" and nothing more.
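One way to structure this kind of probing is slot filling: the system tracks which pieces of context it still lacks and asks about the next missing one. The sketch below hard-codes the slots from the export example (format, destination, frequency, time cost) with canned questions; in practice a language model would fill the slots from free text and phrase the questions itself. The slot names and the `next_question` function are hypothetical.

```python
from typing import Optional

# Hypothetical context slots for an "export" report, in the order we want to ask about them.
# Each slot maps to a canned follow-up question (a real system would let a model phrase these).
EXPORT_SLOTS: dict[str, str] = {
    "format": "What format are you trying to export to?",
    "destination": "What system receives that export?",
    "frequency": "How often do you need to run these exports?",
    "time_cost": "Roughly how much time does the manual work add each time?",
}


def next_question(filled: dict[str, str]) -> Optional[str]:
    """Return the follow-up for the first unfilled slot, or None once every slot is filled."""
    for slot, question in EXPORT_SLOTS.items():
        if not filled.get(slot):
            return question
    return None


# After the user's second message above, format and destination are known.
state = {"format": "CSV", "destination": "data warehouse"}
print(next_question(state))  # asks about frequency next
```

The fixed ordering is a simplification; a model-driven version could choose whichever missing slot best fits what the user just said.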

Natural Language Reduces Friction

Users don't think in form fields. They think in stories, frustrations, and hopes. Conversational interfaces let them express feedback naturally, then AI structures it for your team.

Instead of forcing users to categorize their own feedback (Is this a bug? Feature request? Question?), conversation lets them simply describe their experience. The AI handles categorization, severity assessment, and routing.
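The routing step can be sketched as a lookup from the category the model assigns to a destination queue, with severity deciding whether the item is flagged as urgent. The category labels, queue names, and `route` function below are hypothetical placeholders, and the classification itself (normally done by a language model) is assumed to have already happened.

```python
from enum import Enum


class Category(Enum):
    BUG = "bug"
    FEATURE_REQUEST = "feature_request"
    QUESTION = "question"


# Hypothetical mapping from category to the team queue that should see the item.
QUEUES = {
    Category.BUG: "engineering-triage",
    Category.FEATURE_REQUEST: "product-backlog",
    Category.QUESTION: "support",
}


def route(category: Category, severity: str) -> tuple[str, bool]:
    """Return (queue, urgent) for an already-classified feedback item.

    Items the model rated "high" severity are flagged urgent so the receiving team sees them first.
    """
    return QUEUES[category], severity.lower() == "high"


print(route(Category.BUG, "High"))  # ('engineering-triage', True)
```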

Emotional Intelligence Through Tone Analysis

Modern language models can pick up signals of frustration, enthusiasm, confusion, and urgency in text. A conversational system can use those signals to distinguish a user who is genuinely blocked from one who is mildly inconvenienced, and prioritize accordingly.

This isn't just about sentiment scores. It's about recognizing that "I guess the workaround is fine" from a power user who has been loyal for years carries different weight than the same words from a trial user on day one.
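To make the power-user versus trial-user distinction concrete, a team might blend the model's urgency estimate with account context when ranking feedback. The weights, field names, and `priority_score` function below are invented for illustration and are not a recommended formula; they only show that identical text can score differently depending on who wrote it.

```python
from dataclasses import dataclass


@dataclass
class FeedbackSignal:
    text: str
    urgency: float    # 0.0 to 1.0, assumed to come from a language model's reading of tone
    tenure_days: int  # how long the account has existed
    is_paying: bool


def priority_score(signal: FeedbackSignal) -> float:
    """Blend tone-derived urgency with account context (illustrative weights only)."""
    # Cap tenure credit at two years so very old accounts don't dominate the ranking.
    tenure_weight = min(signal.tenure_days, 730) / 730
    paying_weight = 1.0 if signal.is_paying else 0.0
    return round(0.5 * signal.urgency + 0.3 * tenure_weight + 0.2 * paying_weight, 3)


same_words = "I guess the workaround is fine"
loyal = FeedbackSignal(same_words, urgency=0.4, tenure_days=1095, is_paying=True)
trial = FeedbackSignal(same_words, urgency=0.4, tenure_days=1, is_paying=False)
print(priority_score(loyal), priority_score(trial))
```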

The Jobs-to-Be-Done Framework in Practice

Conversational AI excels at uncovering the job users are trying to accomplish, not just the feature they're requesting. This aligns closely with the Jobs-to-Be-Done framework that many product teams use to guide development.

From Feature Request to Underlying Need

Consider this traditional form submission:

Feature Request: Add calendar integration

A product team might spend weeks building Google Calendar sync, only to discover users actually needed deadline reminders. The form captured the proposed solution, not the underlying job.

A conversational approach uncovers the real need:

User: I wish this had calendar integration.

AI: Calendar integration could mean several things. What would you want to happen between our app and your calendar?

User: I keep missing deadlines because I forget to check the dashboard.

AI: So you'd like to be notified about upcoming deadlines? Would notifications work, or do you specifically need calendar events?

User: Actually, notifications would be perfect. I just need a reminder the day before things are due.

The user didn't need calendar integration; they needed deadline reminders. Two follow-up questions could save weeks of development on the wrong feature.

Discovering Workarounds

Users develop ingenious workarounds that reveal both pain points and potential solutions. Forms rarely surface these; conversations surface them naturally.

AI: You mentioned the reporting is challenging. How are you currently handling that?

User: I export everything to Excel, run a pivot table, then paste it back into a Google Doc for my manager.

AI: That sounds time-consuming. How often do you go through this process?

User: Every Monday. It takes about an hour.

You've just learned that this user spends roughly 52 hours a year (one hour every Monday) on a workaround. That's a compelling data point for prioritizing better reporting, and the Excel pivot-table workflow hints at exactly what the improved reports should show.
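The 52-hour figure is simple annualization: one hour per weekly occurrence times 52 weeks. A small helper like the hypothetical `annual_hours` below makes the same calculation repeatable for any reported workaround. Applying it to the daily CSV cleanup from the export conversation assumes five working days a week, and the affected-user count in the last line is a placeholder you would replace with numbers from your own analytics.

```python
def annual_hours(minutes_per_occurrence: float, occurrences_per_week: float, weeks: int = 52) -> float:
    """Annualize the time a single user spends on a recurring workaround."""
    return minutes_per_occurrence * occurrences_per_week * weeks / 60


weekly_report = annual_hours(60, 1)  # the Monday reporting workaround: 52.0 hours
daily_export = annual_hours(30, 5)   # the daily CSV cleanup, assuming 5 workdays: 130.0 hours
print(weekly_report, daily_export)

# Hypothetical: if 40 users share the reporting workaround, the team-wide cost scales linearly.
print(weekly_report * 40)
```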

Implementing Conversational Feedback

Moving from forms to conversations doesn't require rebuilding your entire feedback infrastructure. The key is starting with high-value touchpoints.

Embedded Widget Approach

A particularly effective implementation embeds a conversational widget directly in your application. When users click "Give Feedback," they enter a chat interface rather than a form.

Key advantages of embedded widgets:

- Contextual awareness: The widget knows which page the user is on, what they were doing, and their account history

- Lower friction: Chat feels lighter than forms, even when gathering more information

- Immediate value: Users get acknowledgment and sometimes instant answers
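Contextual awareness usually means the widget attaches a small payload to the conversation when it opens, so the AI can skip questions the app already knows the answer to. The field names and the `build_context` helper below are hypothetical; a real widget would run in the browser and send something similar to your backend with the first message.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class WidgetContext:
    page: str              # route the user was on when they clicked "Give Feedback"
    last_action: str       # most recent tracked UI event
    plan: str              # account plan, e.g. "trial" or "enterprise"
    account_age_days: int
    recent_errors: list[str] = field(default_factory=list)  # error codes seen this session


def build_context(ctx: WidgetContext) -> str:
    """Serialize the context so it can be sent alongside the first chat message."""
    return json.dumps(asdict(ctx), sort_keys=True)


payload = build_context(
    WidgetContext(page="/reports", last_action="export_clicked", plan="enterprise",
                  account_age_days=400, recent_errors=["EXPORT_TIMEOUT"])
)
print(payload)
```

With a payload like this, the AI could open with "It looks like an export just timed out on the reports page. Is that what you'd like to tell us about?" instead of asking the user to describe where they were.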

Structured Data Extraction

Behind the natural conversation, AI extracts structured data for your systems. For the reporting conversation above, the extracted record might look like this:

| Extracted Field | Value |
|---|---|
| Category | Feature Request |
| Component | Reporting |
| Severity | Medium |
| User Segment | Enterprise |
| Workaround Exists | Yes |
| Time Impact | 1 hour/week |
| Sentiment | Frustrated but loyal |

Your team gets the structured data they need for prioritization while users enjoy a natural interaction.
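On the engineering side, extracted fields are easiest to consume when the model's output is validated against a fixed schema before it reaches your tracker. The sketch below mirrors the table's fields with a Python dataclass and rejects values outside the allowed sets. The allowed values and the `parse_extraction` function are assumptions, and it expects the model to have been prompted to return a JSON object.

```python
import json
from dataclasses import dataclass

# Hypothetical allowed values; adjust to your own taxonomy.
CATEGORIES = {"Bug", "Feature Request", "Question"}
SEVERITIES = {"Low", "Medium", "High"}


@dataclass(frozen=True)
class ExtractedFeedback:
    category: str
    component: str
    severity: str
    user_segment: str
    workaround_exists: bool
    time_impact_hours_per_week: float
    sentiment: str


def parse_extraction(raw: str) -> ExtractedFeedback:
    """Parse model output (assumed to be a JSON object) and validate the enumerated fields.

    Missing or unexpected keys raise TypeError from the dataclass constructor.
    """
    item = ExtractedFeedback(**json.loads(raw))
    if item.category not in CATEGORIES:
        raise ValueError(f"unknown category: {item.category!r}")
    if item.severity not in SEVERITIES:
        raise ValueError(f"unknown severity: {item.severity!r}")
    return item


raw = json.dumps({
    "category": "Feature Request", "component": "Reporting", "severity": "Medium",
    "user_segment": "Enterprise", "workaround_exists": True,
    "time_impact_hours_per_week": 1.0, "sentiment": "Frustrated but loyal",
})
print(parse_extraction(raw))
```

Rejecting malformed output at this boundary lets you re-prompt the model or fall back to manual triage instead of polluting your backlog.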

Handling Edge Cases

Conversational systems need guardrails for edge cases:

- Off-topic responses: Gracefully redirect while acknowledging the user's point

- Abusive content: Detect it and respond calmly, without escalating the exchange

- Technical support masquerading as feedback: Route to support when users need immediate help

- Circular conversations: Recognize when enough information is gathered and wrap up
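Some of these guardrails can be expressed as a small decision function that runs before each AI turn. This sketch uses deliberately crude heuristics (a keyword check for urgent support needs, a turn cap, a repeated-answer check, and a test for whether any required information is still missing) as stand-ins for model-based classifiers. The keywords, the `MAX_TURNS` threshold, and the action names are hypothetical.

```python
from enum import Enum

SUPPORT_KEYWORDS = ("can't log in", "locked out", "data loss", "urgent")  # hypothetical triggers
MAX_TURNS = 6  # hypothetical cap on user turns before wrapping up


class Action(Enum):
    CONTINUE = "continue"
    ROUTE_TO_SUPPORT = "route_to_support"
    WRAP_UP = "wrap_up"


def next_action(user_turns: list[str], missing_slots: set[str]) -> Action:
    """Decide what the feedback bot should do before generating its next reply."""
    latest = user_turns[-1].lower() if user_turns else ""
    # Users who need immediate help should reach support, not a feedback queue.
    if any(keyword in latest for keyword in SUPPORT_KEYWORDS):
        return Action.ROUTE_TO_SUPPORT
    # Stop when nothing is missing, when the chat runs long, or when the user repeats themselves.
    repeated = len(user_turns) >= 2 and user_turns[-1].strip().lower() == user_turns[-2].strip().lower()
    if not missing_slots or len(user_turns) >= MAX_TURNS or repeated:
        return Action.WRAP_UP
    return Action.CONTINUE


print(next_action(["Export is broken"], {"format", "frequency"}))  # Action.CONTINUE
```

Off-topic and abusive messages would need their own classifiers; keyword lists like this one are only a placeholder for them.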

Measuring Success

Transitioning to conversational feedback requires new metrics beyond form completion rates.

Quality Metrics

- Actionability rate: Percentage of feedback items with enough context to act on

- Follow-up reduction: How much less often product teams need to contact users for clarification

- Insight density: Unique insights per feedback item

- Time to understanding: How quickly teams can assess and prioritize feedback
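Most of these quality metrics reduce to simple ratios over your feedback records once each item has been reviewed. The sketch below computes actionability rate, follow-up rate, and insight density from a hypothetical record shape, then derives follow-up reduction as the drop in follow-up rate between two channels. How an item gets marked actionable or how insights are counted is a team-specific judgment that the code assumes has already been made, and the sample records are made up purely to show the arithmetic.

```python
from dataclasses import dataclass


@dataclass
class FeedbackItem:
    actionable: bool        # a reviewer judged it had enough context to act on
    needed_follow_up: bool  # the team had to contact the user for clarification
    insights: int           # distinct insights a reviewer tagged on the item


def quality_metrics(items: list[FeedbackItem]) -> dict[str, float]:
    """Compute ratio-style quality metrics; returns zeros for an empty list."""
    n = len(items)
    if n == 0:
        return {"actionability_rate": 0.0, "follow_up_rate": 0.0, "insight_density": 0.0}
    return {
        "actionability_rate": sum(i.actionable for i in items) / n,
        "follow_up_rate": sum(i.needed_follow_up for i in items) / n,
        "insight_density": sum(i.insights for i in items) / n,
    }


# Made-up sample records, purely to show the calculation.
form_items = [FeedbackItem(False, True, 1), FeedbackItem(True, True, 1),
              FeedbackItem(False, False, 0), FeedbackItem(True, True, 2)]
chat_items = [FeedbackItem(True, False, 3), FeedbackItem(True, True, 2)]

# Follow-up reduction: how much the follow-up rate drops after switching channels.
reduction = quality_metrics(form_items)["follow_up_rate"] - quality_metrics(chat_items)["follow_up_rate"]
print(reduction)
```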

User Experience Metrics

- Completion rate: Users who finish the conversation (compare against your existing form completion rate)

- Return rate: Users who provide feedback again

- Satisfaction with process: How users rate the feedback experience itself

- Time invested: Conversations may take longer than forms, so track time spent alongside satisfaction to confirm the extra time feels worthwhile to users
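Completion and return rates can be computed from conversation session logs. This sketch assumes each session record carries a user ID and a `completed` flag (hypothetical field names), and defines return rate as the share of users with two or more sessions. Running the same function over historical form submissions gives the baseline to compare against.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class Session:
    user_id: str
    completed: bool  # user reached the bot's wrap-up rather than abandoning mid-conversation


def ux_metrics(sessions: list[Session]) -> dict[str, float]:
    """Completion rate over all sessions; return rate is the share of users with 2+ sessions."""
    if not sessions:
        return {"completion_rate": 0.0, "return_rate": 0.0}
    per_user = Counter(s.user_id for s in sessions)
    returning = sum(1 for count in per_user.values() if count >= 2)
    return {
        "completion_rate": sum(s.completed for s in sessions) / len(sessions),
        "return_rate": returning / len(per_user),
    }


sessions = [Session("a", True), Session("a", True), Session("b", False), Session("c", True)]
print(ux_metrics(sessions))
```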

Key Takeaways

- Forms capture symptoms, conversations capture causes: The ability to ask follow-up questions transforms vague requests into actionable insights with full context.

- Dynamic questioning adapts to users: Instead of one-size-fits-all fields, conversational AI tailors its questions based on each user's responses and situation.

- Natural language reduces friction: Users express feedback more completely when they can speak naturally rather than fitting thoughts into predefined fields.

- AI extracts structure from conversation: Behind the natural dialogue, systems capture the categorized, prioritized data product teams need.

- Jobs-to-Be-Done emerges naturally: Conversations uncover the underlying need, not just the proposed solution, leading to better product decisions.

- Workarounds reveal opportunity: Discussion naturally surfaces the creative solutions users have developed, highlighting both pain points and potential features.

- Implementation can be incremental: Start with high-value touchpoints like post-interaction feedback, then expand based on results.

Ready to transform how you collect user feedback? Try User Vibes to see conversational AI feedback in action.


Written by User Vibes OS Team

Published on January 10, 2026